Causal Incentives Working Group

We are a collection of researchers interested in using causal models to understand agents and their incentives, in order to design safe and fair AI algorithms. If you are interested in collaborating on any related problems, feel free to reach out to us.

Introduction

For an accessible overview of our work, see our blogpost sequence Towards Causal Foundations of Safe AGI. It builds on our UAI 2023 tutorial (slides, video).

Papers

Incentives for Responsiveness, Instrumental Control and Impact: Journal version of AI:ACP, introducing impact incentives.

Ryan Carey, Eric Langlois, Chris van Merwijk, Shane Legg, Tom Everitt

Artificial Intelligence, 2025

General agents need world models: Shows that task-general agents need a predictive (not necessarily causal) model of the world.

Jonathan Richens, David Abel, Alexis Bellot, Tom Everitt

ICML, 2025

The Limits of Predicting Agents from Behaviour: Uses causal transportability to explore when we can trust black-box agents based on their past behaviour.

Alexis Bellot, Jonathan Richens, Tom Everitt

ICML, 2025

Evaluating the Goal-Directedness of Large Language Models: Establishes a notion of goal-directedness for LLMs that is empirically predictive and consistent across tasks.

Tom Everitt, Cristina Garbacea, Alexis Bellot, Jonathan Richens, Henry Papadatos, Siméon Campos, Rohin Shah

arXiv, 2025

Higher-Order Belief in Incomplete Information MAIDs: Adds subjective beliefs and higher-order beliefs (beliefs about beliefs, and so on) to multi-agent influence diagrams.

Jack Foxabbott, Rohan Subramani, Francis Rhys Ward

AAMAS, 2025

Measuring Goal-directedness: Proposes MEG (Maximum Entropy Goal-directedness), a framework for measuring goal-directedness in causal models and MDPs.

Matt MacDermott, James Fox, Francesco Belardinelli, Tom Everitt

NeurIPS, 2024 (Spotlight)

Robust agents learn causal world models (tweet summary, slides): Shows that a causal model is necessary for robust generalisation under distributional shifts.

Jon Richens, Tom Everitt

ICLR, 2024 (Outstanding Paper Award, Honorable Mention)

The Reasons that Agents Act: Intention and Instrumental Goals (tweet summary):

Formalises intent in causal models and connects it with a behavioural characterisation that can be applied to LLMs.

Francis Rhys Ward, Matt MacDermott, Francesco Belardinelli, Francesca Toni, Tom Everitt

AAMAS, 2024

Characterising Decision Theories with Mechanised Causal Graphs:

Shows that mechanised causal graphs can be used to cleanly define different decision theories.

Matt MacDermott, Tom Everitt, Francesco Belardinelli

arXiv, 2023

On Imperfect Recall in Multi-Agent Influence Diagrams:

Extends the theory of multi-agent influence diagrams (and causal games) to cover imperfect recall, mixed policies, correlated equilibria, and complexity results.

James Fox, Matt MacDermott, Lewis Hammond, Paul Harrenstein, Alessandro Abate, Michael Wooldridge

TARK, 2023 (Best Paper Award)

Honesty Is the Best Policy: Defining and Mitigating AI Deception:

Gives formal definitions of intent and deception, together with graphical criteria, and illustrates them with RL and LM experiments.

Francis Rhys Ward, Tom Everitt, Francesco Belardinelli, Francesca Toni

NeurIPS, 2023

Human Control: Definitions and Algorithms:

We study definitions of human control, including variants of corrigibility and alignment, the assurances they offer for human autonomy, and the algorithms that can be used to obtain them.

Ryan Carey, Tom Everitt

UAI, 2023

Discovering Agents (summary):

A new causal definition of agency that allows us to discover whether an agent is present in a system, leading to better causal modelling of AI agents and their incentives.

Zachary Kenton, Ramana Kumar, Sebastian Farquhar, Jonathan Richens, Matt MacDermott, Tom Everitt

Artificial Intelligence Journal, 2023

Reasoning about Causality in Games (tweet thread):

Introduces (structural) causal games, a single modelling framework that allows for both causal and game-theoretic reasoning.

Lewis Hammond, James Fox, Tom Everitt, Ryan Carey, Alessandro Abate, Michael Wooldridge

Artificial Intelligence Journal, 2023

Counterfactual Harm:

Agents must have a causal understanding of the world in order to robustly minimize harm across distributional shifts.

Jonathan G. Richens, Rory Beard, Daniel H. Thompson

NeurIPS, 2022

Path-Specific Objectives for Safer Agent Incentives:

How do you tell an ML system to optimise an objective, but not by any means? For example, how do you tell it to optimise user engagement without manipulating the user?

Sebastian Farquhar, Ryan Carey, Tom Everitt

AAAI-22

A Complete Criterion for Value of Information in Soluble Influence Diagrams:

Presents a complete graphical criterion for value of information in influence diagrams with more than one decision node, along with ID homomorphisms and trees of systems.

Chris van Merwijk*, Ryan Carey*, Tom Everitt

AAAI-22

Why Fair Labels Can Yield Unfair Predictions: Graphical Conditions for Introduced Unfairness:

When is unfairness incentivised? Perhaps surprisingly, unfairness can be incentivized even when labels are completely fair.

Carolyn Ashurst, Ryan Carey, Silvia Chiappa, Tom Everitt

AAAI-22

Agent Incentives: A Causal Perspective (AI:ACP) (summary): An agent’s incentives are largely determined by its causal context. This paper gives sound and complete graphical criteria for four incentive concepts: value of information, value of control, response incentives, and control incentives.

Tom Everitt*, Ryan Carey*, Eric Langlois*, Pedro A. Ortega, Shane Legg

AAAI-21

How RL Agents Behave When Their Actions Are Modified (summary): RL algorithms like Q-learning and SARSA make different causal assumptions about their environment. These assumptions determine how user interventions affect the learnt policy.

Eric Langlois, Tom Everitt

AAAI-21

Equilibrium Refinements for Multi-Agent Influence Diagrams: Theory and Practice (video, summary): Introduces a notion of subgames in multi-agent (causal) influence diagrams, alongside classic equilibrium refinements.

Lewis Hammond, James Fox, Tom Everitt, Alessandro Abate, Michael Wooldridge

AAMAS-21

Reward tampering problems and solutions in reinforcement learning: A causal influence diagram perspective (summary, summary 2): Analyzes various reward tampering (aka “wireheading”) problems with causal influence diagrams.

Tom Everitt, Marcus Hutter, Ramana Kumar, Victoria Krakovna

Synthese, 2021

PyCID: A Python Library for Causal Influence Diagrams (github): Describes our Python package for analyzing (multi-agent) causal influence diagrams.

James Fox, Tom Everitt, Ryan Carey, Eric Langlois, Alessandro Abate, Michael Wooldridge

SciPy, 2021

Modeling AGI safety frameworks with causal influence diagrams

Tom Everitt, Ramana Kumar, Victoria Krakovna, Shane Legg

IJCAI AI Safety Workshop, 2019

The Incentives that Shape Behavior (summary): Superseded by AI:ACP.

Ryan Carey*, Eric Langlois*, Tom Everitt, Shane Legg

Understanding Agent Incentives using Causal Influence Diagrams. Part I: Single Action Settings (summary): Superseded by AI:ACP.

Tom Everitt, Pedro A. Ortega, Elizabeth Barnes, Shane Legg

(* denotes equal contribution)

Software

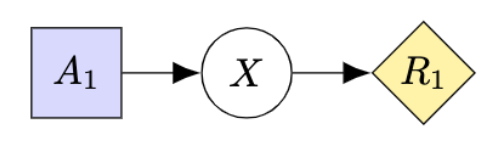

pycid: A Python implementation of causal influence diagrams, built on pgmpy.

CID LaTeX Package: A package for drawing professional-looking influence diagrams; see the tutorial.

Working group members

- Tom Everitt: Google DeepMind

- Ryan Carey: University of Oxford

- Lewis Hammond: University of Oxford, Cooperative AI Foundation

- James Fox: University of Oxford

- Jon Richens: Google DeepMind

- Francis Rhys Ward: Imperial College

- Matt MacDermott: Imperial College

- David Reber: University of Chicago

- Shreshth Malik: University of Oxford

- Milad Kazemi: University College London

- Sebastian Benthall: New York University

- Reuben Adams: University College London

- Damiano Fornasiere: University of Barcelona

- Pietro Greiner: University of Barcelona

Learn more

Our work builds on both the causality and the AGI safety literature. Below are some pointers to background readings in each.

Causality

An accessible introduction to causality is The Book of Why. The next step is A Primer, which introduces the formal frameworks, along with plenty of exercises. The deepest and most detailed account is Causality, but it’s not an easy read (chapters 1, 3, and 7 are the most important).

AGI Safety

The book that initiated the field is Superintelligence. It remains a good read, though it has since been complemented by books such as Human Compatible, overview papers like Is Power-Seeking AI an Existential Risk?, AGI safety literature review, and Artificial Intelligence, Values and Alignment, and blog posts like Without specific countermeasures and What Failure Looks Like.

There are also good online courses on the topic, such as the AGI Safety Fundamentals Course and the ML Safety Course.

Getting involved

We are currently not meeting actively.